BTS 'Love Yourself: Speak Yourself' World Tour

Innovative tour uses Disguise workflow solutions and hardware to drive the largest touring AR content.

Boy band BTS have sold over 15 million albums worldwide, making them South Korea’s best-selling artist of all time. They are continuing their world domination by thrilling fans around the globe with their ‘Love Yourself: Speak Yourself’ World Tour.

The 20-date tour is a stadium extension of the band’s successful headlining tour that began in 2018 and has been very well received, with the London Wembley Stadium dates selling out within two minutes of tickets being released. The innovative production uses Disguise gx 2 media servers to drive video content, integrate generative content and render AR content.

Management company Big Hit Entertainment, creative directors Plan A and production designers FragmentNine conceived the tour as a big festival, with every song on the setlist designed to communicate with fans, known collectively as the ARMY. Performances include inflatables, a water cannon, gunpowder and fireworks, as well as audience participation with handheld ARMY BOMB sticks. For the song ‘Trivia: Love’, a mysterious mood is created using live AR, a first for a live concert.

LIVE-LAB Co., Ltd. (Lighting and Visual Expression Laboratory) recommended using Disguise for the tour “because of its flexibility and stability in coping with changing variables during the long tour,” says CEO Alvin Chu. LIVE-LAB is a Disguise studio partner based in Seoul.

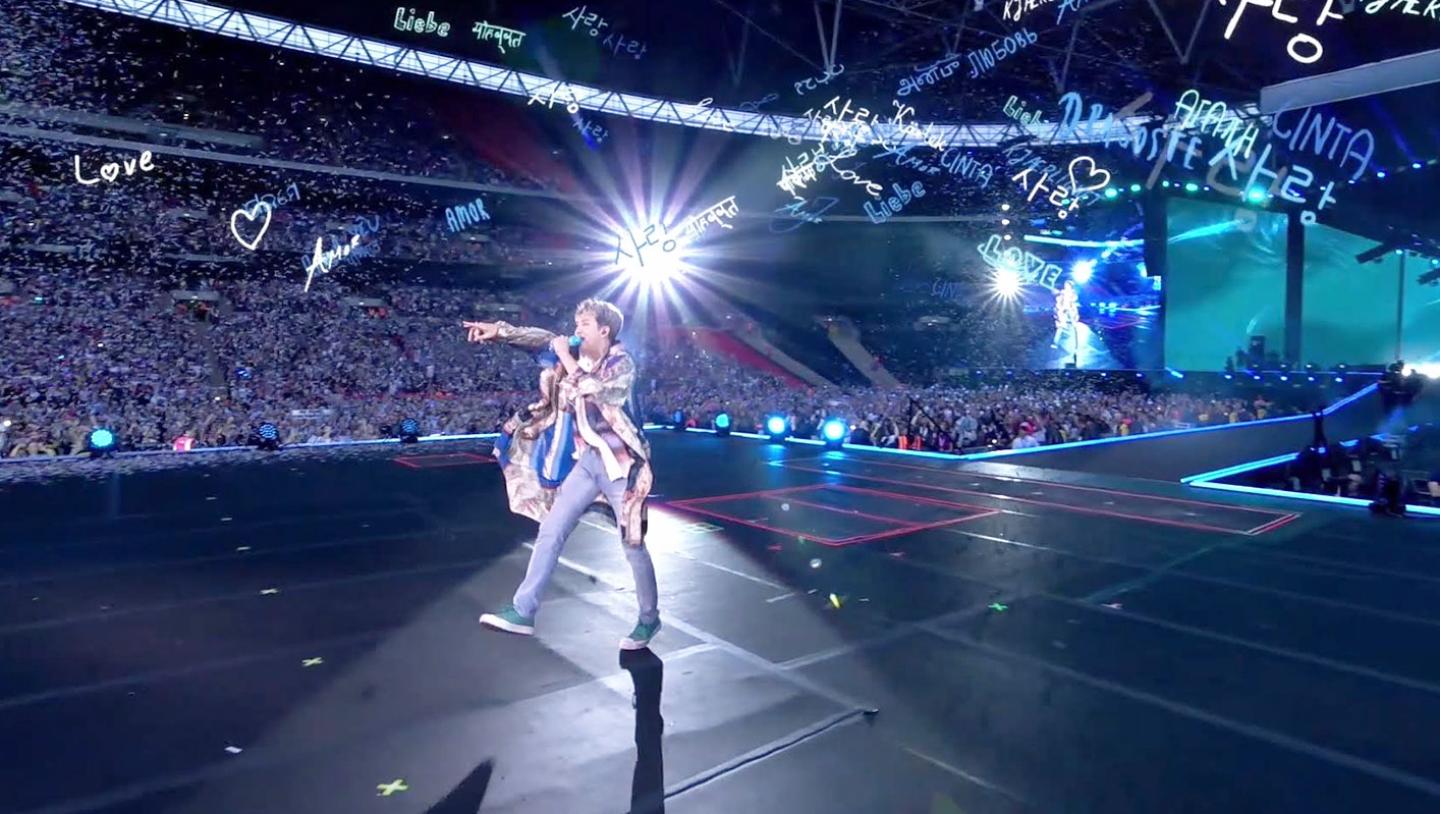

All of it Now (AOIN) were brought on board to produce screen content for four of the band’s songs, select interstitials, Notch IMAG effects and AR content for the song ‘Trivia: Love’. The set features words and shapes, including a heart motif, floating across the stage and over the audience as the band interacts with the elements.

Two gx 2 media servers were deployed as master and understudy for the AR content. The server handles two camera inputs and eight outputs and exports PGM for songs with high-resolution video content and Notch effects. The servers used 4x SDI VFC cards to effectively interface with the existing broadcast infrastructure.

The idea of using augmented reality for ‘Trivia: Love’ was born out of conversations between Big Hit, Plan A and FragmentNine. The team had some reference videos that were a compelling jumping-off point, but AR had barely found its way into the entertainment industry.

Tasked with turning these napkin ideas into reality, FragmentNine, consisting of principal designers Jeremy Lechterman and Jackson Gallagher, reached out to their long-time associates and friends at All of it Now (AOIN) to begin exploring possibilities.

Over the following weeks, the teams traded ideas until they homed in on the style and progression of the song. The AR content ultimately consisted of words, shapes, and a heart motif that the artist could interact with.

In production rehearsals, AOIN constructed a half-scale model of the stage to test AR cameras and motion tracking. This wasn’t an idea that could come off the shelf; it needed to be workshopped. The model also allowed FragmentNine, Plan A, and AOIN to make revisions to the content together before full on-site rehearsals.

AOIN were tasked with integrating AR into the stadium-scale tour using the existing camera and video system, which was no small feat. The tour is believed to be the first global, multi-date stadium tour to use AR.

“Creatively we needed to help the artists, management and production understand what AR content entailed and how it would look,” explains Kevin Zhu, one of AOIN’s AR Content Designers. “This involved a lot of discussion and client education on the variables that impact the end product: lighting, size/scale, colour, front plate compositing and more. This kind of discussion was necessary because the technology and implementation of AR graphics was so new to the major stakeholders. Ultimately, it was a good learning experience all around, and we will be taking some of those lessons forward into projects.”

On the technical side, AOIN had to overcome the unknowns associated with the integration of AR workflows, notes Producer and AR Content Designer, Berto Mora. “We have done many tests within our studio where everything works in a controlled environment, but when you are on-site you have to deal with real-world issues. Since a lot of this technology is relatively new, we have to deal with a lot of unknown issues and unwritten features. Working closely with our partners, such as Disguise, allows us to accomplish what we need to make these workflows operational in the real world.”

According to AOIN Executive Producer Danny Firpo, “Understanding the performance on a stadium scale was an important factor. The sheer scale of a stadium setting meant that the performer was almost too small to see in person except for those in the front row. A significant percentage of the audience would experience the show and watch the performer through the IMAG screens with the AR effects.”

The Disguise workflow was vital in making creative content decisions without having the full AR rig set up. Firpo explains, “We used the spatial mapping feature on the gx 2 pretty heavily, which allowed us to virtually block out all the camera positions and AR effects on stage from within our studio. Management could approve the changes and revisions remotely using Disguise’s stage render video as a virtual proxy of the actual performance.”

The integration of Disguise with the stYpe camera tracking system allowed AOIN to link virtual camera objects in Disguise’s software GUI with the real-time lens and 6D positional data that the stYpe unit received from a camera and broadcasting output. The handheld camera was tracked with stYpe’s RedSpy system, and a stationary camera at the FOH camera riser position was tracked with a Vinten tracking head.
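As a rough illustration of what linking a virtual camera to tracking data involves, the sketch below projects a point on the virtual stage into the pixel grid of a tracked camera. It is not Disguise or stYpe code: the `TrackedCamera` fields, the axis conventions (x right, y up, z away from the camera at zero pan), the distortion-free pinhole lens model and the 1920x1080 raster are all assumptions made for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedCamera:
    """One frame of hypothetical tracking data (not a stYpe or Disguise type)."""
    x: float      # camera position in metres, stage coordinates
    y: float
    z: float
    pan: float    # degrees about the vertical axis, positive turns towards +x
    tilt: float   # degrees, positive looks up
    roll: float   # degrees about the viewing axis
    hfov: float   # horizontal field of view in degrees, from the lens data


def project(cam, point, width=1920, height=1080):
    """Return the (u, v) pixel for a world point, or None if it is behind the camera."""
    # Express the point relative to the camera position.
    px, py, pz = point[0] - cam.x, point[1] - cam.y, point[2] - cam.z

    # Undo pan, then tilt, then roll to move into the camera's own axes.
    a = math.radians(cam.pan)
    px, pz = math.cos(a) * px - math.sin(a) * pz, math.sin(a) * px + math.cos(a) * pz
    a = math.radians(cam.tilt)
    py, pz = math.cos(a) * py - math.sin(a) * pz, math.sin(a) * py + math.cos(a) * pz
    a = math.radians(cam.roll)
    px, py = math.cos(a) * px + math.sin(a) * py, -math.sin(a) * px + math.cos(a) * py

    if pz <= 0:
        return None  # behind the lens, nothing to draw

    # Pinhole projection: focal length in pixels follows from the horizontal FOV.
    focal = (width / 2) / math.tan(math.radians(cam.hfov) / 2)
    return (width / 2 + focal * px / pz, height / 2 - focal * py / pz)


if __name__ == "__main__":
    # A camera 10 m in front of the stage origin, panned 45 degrees to the right.
    cam = TrackedCamera(x=0.0, y=1.5, z=-10.0, pan=45.0, tilt=0.0, roll=0.0, hfov=90.0)
    print(project(cam, (10.0, 1.5, 0.0)))
```

In a model like this, any mismatch between the reported pose or lens values and the physical camera moves the graphic to the wrong pixel, which is one way to see why the alignment had to be judged through the live camera feed.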

“We had to make a virtual stage line up perfectly with the real stage, and we were only able to see this alignment through the live camera feed into the gx 2... Disguise became a powerful real-time compositing tool that helped us understand adjustments we needed to make for perspective and position.”

Touring AR Engineer

“The virtual stage assets that FragmentNine delivered to us for the project were scaled perfectly for the real-world space. Being able to verify scale within the Disguise software made aligning the real world and the virtual world much simpler,” explains Touring AR Engineer Neil Carman.

Once Disguise had all the connected stYpe systems agree on a universal zero point, from which all graphics would emanate, the server allowed AOIN to precisely move individual elements of the graphics from different sections during rehearsals. This aided AOIN in choreographing interactive movements between the performers and the graphics. “The stability of the hardware and flexibility of the software on the Disguise server contributes greatly to the success of a long tour,” says LIVE-LAB’s Chu.
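The idea of a universal zero point can be sketched in a few lines: each tracking system reports positions in its own local coordinates, and a per-system calibration maps them into one shared stage frame. The example below is only an illustration under stated assumptions. `FrameOffset` and `to_stage` are hypothetical names, and the calibration is reduced to a translation plus a rotation about the vertical axis, which may be simpler than what the tour actually used.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FrameOffset:
    """Hypothetical calibration for one tracking system."""
    origin: tuple   # where the shared zero point sits in local coordinates (metres)
    yaw_deg: float  # rotation about the vertical axis that turns local axes onto stage axes


def to_stage(local_point, offset):
    """Convert a point from a tracker's local frame into shared stage coordinates."""
    # Shift so that the agreed zero point becomes (0, 0, 0).
    dx = local_point[0] - offset.origin[0]
    dy = local_point[1] - offset.origin[1]
    dz = local_point[2] - offset.origin[2]

    # Rotate about the vertical (y) axis so both systems share the same headings.
    yaw = math.radians(offset.yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * dx + s * dz, dy, -s * dx + c * dz)


if __name__ == "__main__":
    # Two assumed systems with different local origins and headings.
    handheld = FrameOffset(origin=(2.0, 0.0, 5.0), yaw_deg=0.0)
    foh = FrameOffset(origin=(-1.0, 0.0, 0.0), yaw_deg=90.0)

    # The same physical spot, as each system would report it locally.
    print(to_stage((3.0, 0.0, 5.0), handheld))
    print(to_stage((-1.0, 0.0, 1.0), foh))
```

Both calls describe the same physical spot one metre along the stage x axis, so after conversion they agree to within floating-point rounding. Graphics placed relative to the shared origin then sit in the same place for every camera.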

“Controlling the image projected on the large LED screen is important for the overall harmony of the elements, and Disguise shows stable and powerful operating capabilities,” adds Creative Director Kevin Kim of Plan A.